Preventing search engines from indexing a site is often necessary when building a site for a client, because we don’t want Google to index it until it is ready.

Blocking indexing matters here because the site is still in development and page URLs can change (for example, while the theme still has its demo content 🙄). If those pages were indexed, we would have to deal with 301 redirects later.

Internal site blocking

Another use case for disabling search indexing is internal websites. Many businesses run an internal website for employees, such as an employee portal or a closed forum, and would not want it to appear in search engines at all.

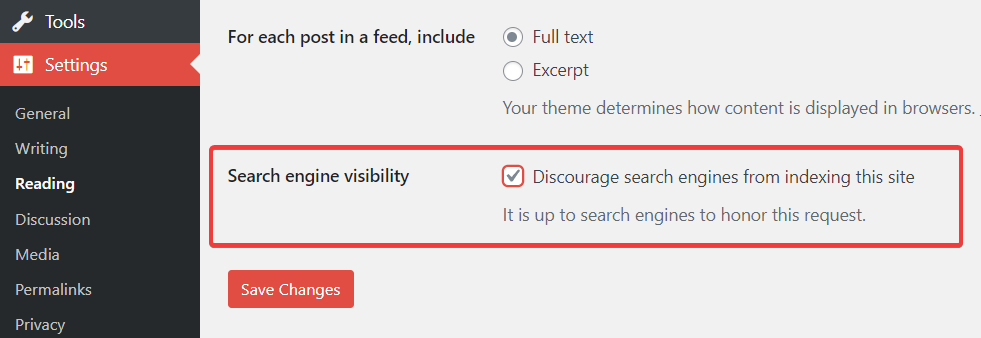

WordPress has a built-in setting that discourages search engines from indexing the site.

Go to Settings > Reading, check the “Discourage search engines from indexing this site” box under “Search engine visibility”, and save the changes.

When you save this setting, WordPress adds the following meta tag to pages:

```html
<meta name='robots' content='noindex,nofollow' />
```

If you want to block only specific user agents, for example just Google or just Bing, you can create a robots.txt file in your site’s root directory, so that it is served at /robots.txt.
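Whichever approach you choose, it is worth checking what a crawler actually receives. For the meta tag, here is a minimal sketch using only Python’s standard `html.parser` module that collects the directives from every `<meta name="robots">` tag in a page’s HTML. The helper names `robots_directives` and `is_noindex` are hypothetical, not part of WordPress or any library, and fetching the HTML (for example with `urllib.request`) is left out so the sketch runs offline.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # Self-closing tags like <meta ... /> also end up here.
        if tag != "meta":
            return
        attributes = {name.lower(): (value or "") for name, value in attrs}
        # Only the generic "robots" meta tag is checked, not bot-specific ones.
        if attributes.get("name", "").lower() == "robots":
            # content is a comma-separated list, e.g. "noindex,nofollow"
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return the lower-cased robots directives found in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def is_noindex(html: str) -> bool:
    """True if any robots meta tag asks search engines not to index the page."""
    return "noindex" in robots_directives(html)


page = "<head><meta name='robots' content='noindex,nofollow' /></head>"
print(is_noindex(page))                 # True
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```

Because the parser lower-cases and trims each directive, variants such as `noindex, nofollow` (with a space) are detected as well.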

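A robots.txt file lets us tell all bots, or only specific ones, which parts of the site they may crawl: the entire site or just some sections of it. Strictly speaking, robots.txt controls crawling rather than indexing, so a blocked URL can still appear in search results if other sites link to it.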
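To see how a crawler reads per-bot rules, here is a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt content is a hypothetical example that blocks Googlebot from the whole site while letting every other bot in; `Googlebot` and `Bingbot` are the crawlers’ user-agent tokens, and `example.com` is a placeholder domain. Python’s parser is only an approximation of how real search engines interpret the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot is blocked everywhere,
# all other user agents may crawl the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for agent in ("Googlebot", "Bingbot"):
    allowed = parser.can_fetch(agent, "https://example.com/sample-page/")
    print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Googlebot matches its own group and is blocked, while Bingbot falls through to the `*` group and is allowed.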

A basic robots.txt file that blocks all user agents (bots) from the entire site:

```
User-agent: *
Disallow: /
```

We can also use this file to keep search engines out of unwanted sections of the website, for example the WordPress core folders.

The following file blocks access to the WordPress folders but leaves the rest of the website and all its pages open to crawlers:

```
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
Allow: /
```
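The same standard-library parser can be used to sanity-check these WordPress rules before deploying them. The sketch below feeds the file to `urllib.robotparser` and asks whether a generic bot (the user agent `AnyBot` is a made-up name) may fetch a few hypothetical URLs on the placeholder domain `example.com`.

```python
from urllib.robotparser import RobotFileParser

# The WordPress-folder rules from the robots.txt example.
WP_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /wp-content/plugins/
Disallow: /wp-content/themes/
Allow: /
"""

parser = RobotFileParser()
parser.parse(WP_ROBOTS_TXT.splitlines())

# Hypothetical URLs: regular pages and uploads should stay crawlable,
# the WordPress system folders should not.
urls = [
    "https://example.com/",
    "https://example.com/about/",
    "https://example.com/wp-admin/options.php",
    "https://example.com/wp-includes/js/jquery/jquery.min.js",
    "https://example.com/wp-content/plugins/some-plugin/readme.txt",
    "https://example.com/wp-content/uploads/2024/01/photo.jpg",
]
for url in urls:
    status = "allowed" if parser.can_fetch("AnyBot", url) else "blocked"
    print(f"{status:8} {url}")
```

Python’s parser applies the first rule that matches, in file order, whereas Google documents that it picks the most specific (longest) matching rule. For this file both give the same answers, because the only `Allow` is the catch-all `/` at the end.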